|

Brown University |

Brown University |

Brown University |

|

Google Research |

Google Research |

Brown University |

Brown University |

|

|

|

|

Reinforcement learning via sequence modeling aims to mimic the success of language models by treating the actions taken by an embodied agent as tokens to predict. Despite the promising performance of this approach, it remains unclear whether embodied sequence modeling leads to the emergence of internal representations that capture environmental state information. A model that lacks abstract state representations would be liable to make decisions based on surface statistics, which fail to generalize. We take the BabyAI environment, a grid world in which language-conditioned navigation tasks are performed, and build a sequence modeling Transformer that takes a language instruction, a sequence of actions, and environmental observations as its inputs. To investigate the emergence of abstract state representations, we design a "blindfolded" navigation task, where only the initial environmental layout, the language instruction, and the action sequence to complete the task are available for training.

Our probing results show that intermediate environmental layouts can be reasonably reconstructed from the internal activations of a trained model, and that language instructions play a role in the reconstruction accuracy. Our results suggest that many key features of state representations can emerge via embodied sequence modeling, supporting an optimistic outlook for applications of sequence modeling objectives to more complex embodied decision-making domains. |

|

|

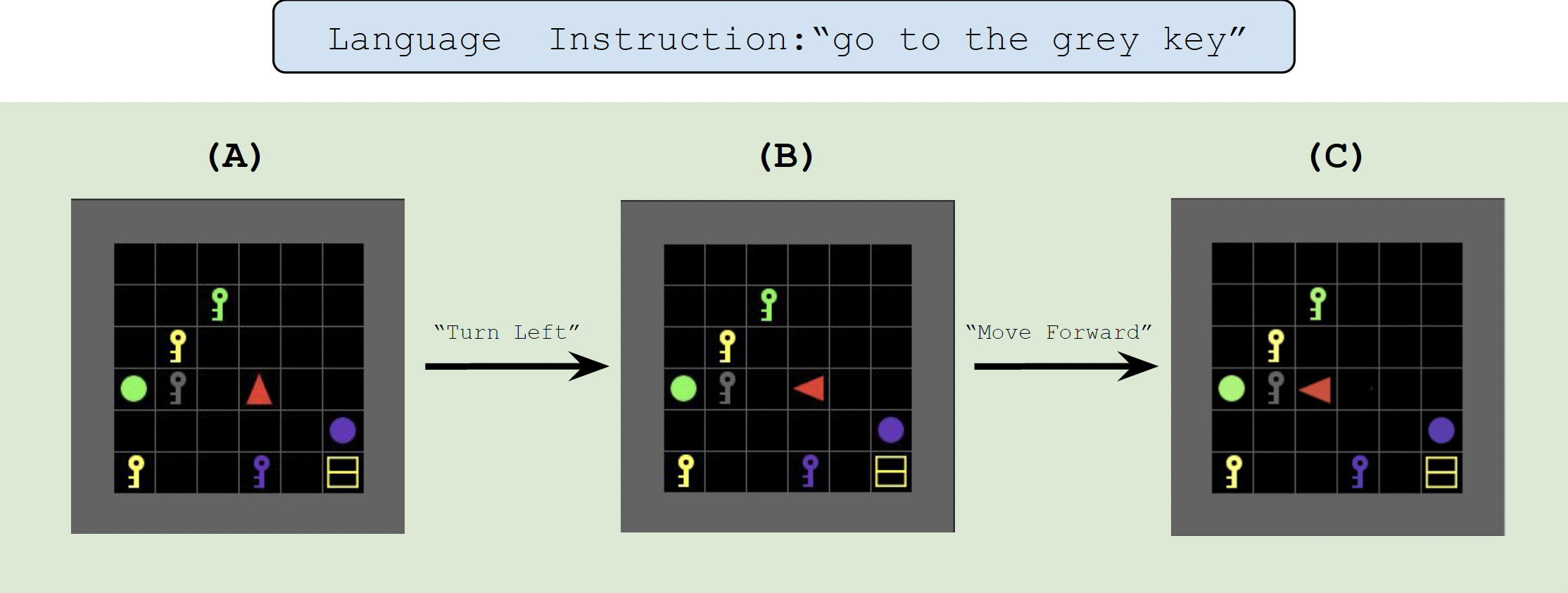

We use the BabyAI environment as a testbed to investigate whether embodied sequence modeling leads to the emergence of abstract internal representations of the environment. The goal in this environment is to control an agent that navigates and interacts in an N by N grid to follow a given language instruction (e.g., "go to the yellow key"). Above is a demonstration of a language-conditioned navigation task in the BabyAI GoToLocal level, where an agent (the red triangle) needs to navigate to the target object specified in the given instruction. In this example trajectory, the agent achieves the goal "go to the grey key".

|

|

|

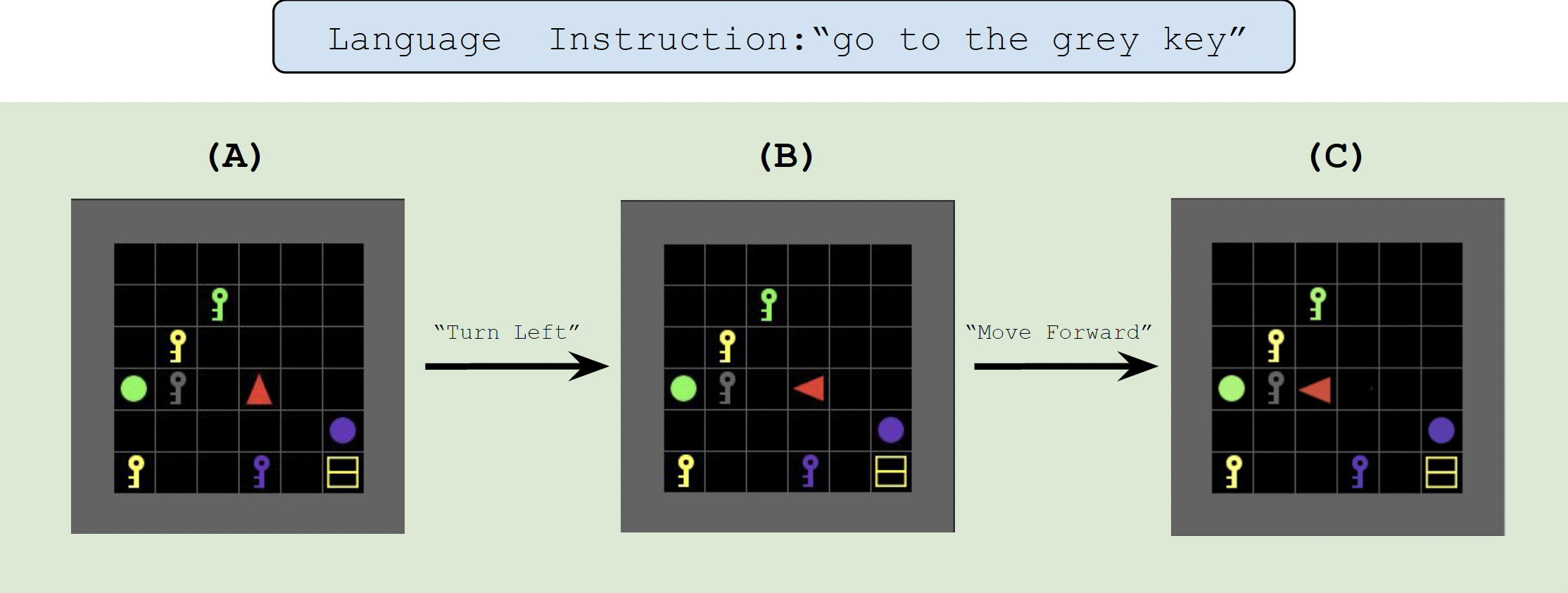

We build our embodied sequence modeling framework on a Transformer-based architecture, which lets us process heterogeneous data (e.g., natural language instructions, states, and actions) as sequences and autoregressively model the state-action trajectories with causal attention masks.
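To make the idea of processing heterogeneous inputs as one sequence more concrete, the following is a minimal sketch in plain Python. The token ordering (instruction first, then alternating state and action tokens), the `("modality", value)` token format, and the function names `build_sequence` and `causal_mask` are assumptions made for illustration; the page does not specify how the model actually lays out or embeds its inputs.

```python
from typing import List, Tuple

Token = Tuple[str, str]  # (modality, value), e.g. ("action", "forward")


def build_sequence(
    instruction: List[str], states: List[str], actions: List[str]
) -> List[Token]:
    """Flatten one trajectory into [instruction..., s_0, a_0, s_1, a_1, ...].

    The ordering is an assumed layout, not the authors' confirmed format.
    """
    if len(states) != len(actions):
        raise ValueError("expected one state per action")
    sequence: List[Token] = [("lang", word) for word in instruction]
    for state, action in zip(states, actions):
        sequence.append(("state", state))
        sequence.append(("action", action))
    return sequence


def causal_mask(length: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]


tokens = build_sequence(
    instruction=["go", "to", "the", "grey", "key"],
    states=["s0", "s1"],
    actions=["left", "forward"],
)
mask = causal_mask(len(tokens))
```

With a mask of this shape, each position can only attend to itself and earlier positions, which is what allows the trajectory to be modeled autoregressively.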

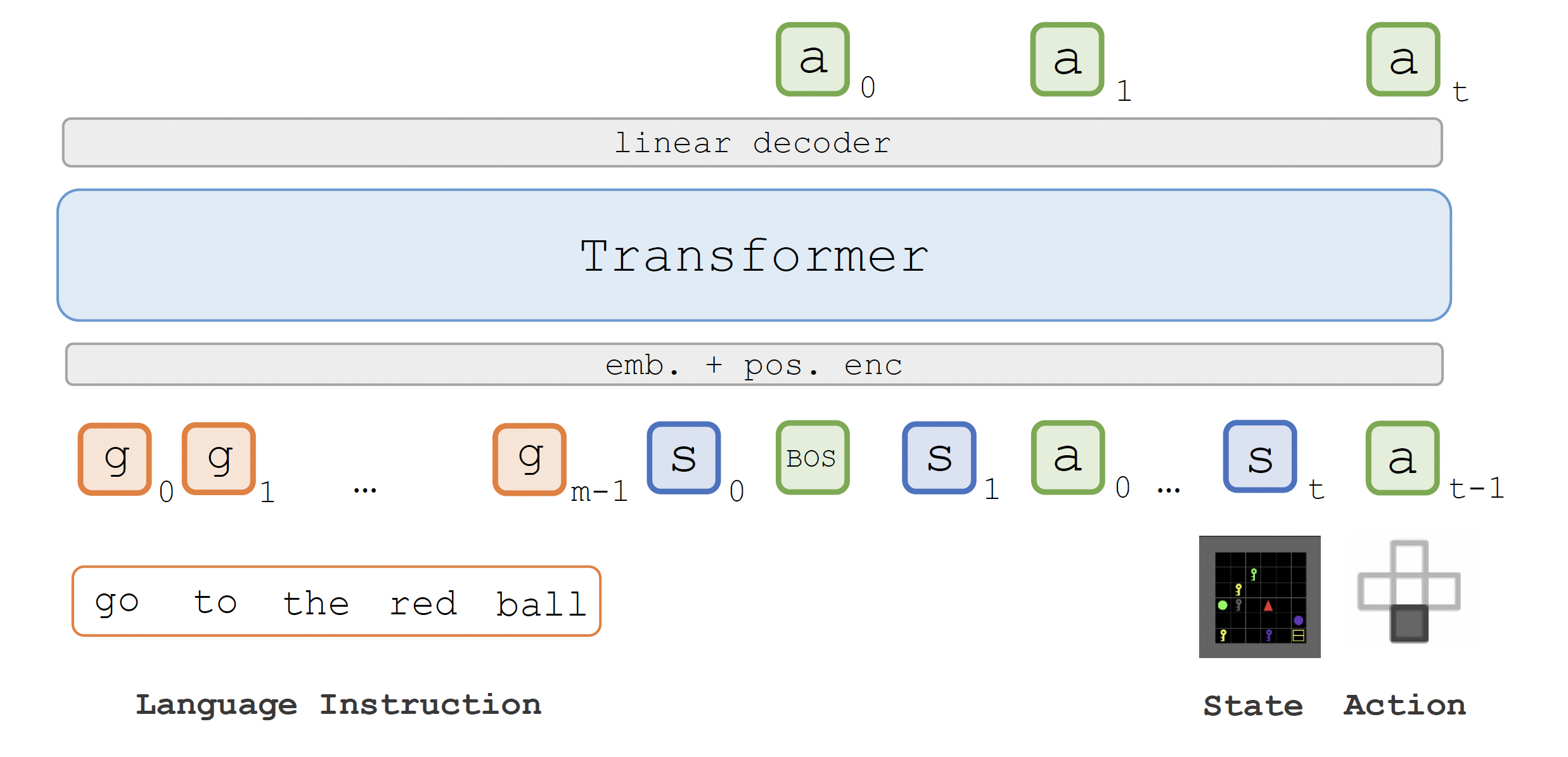

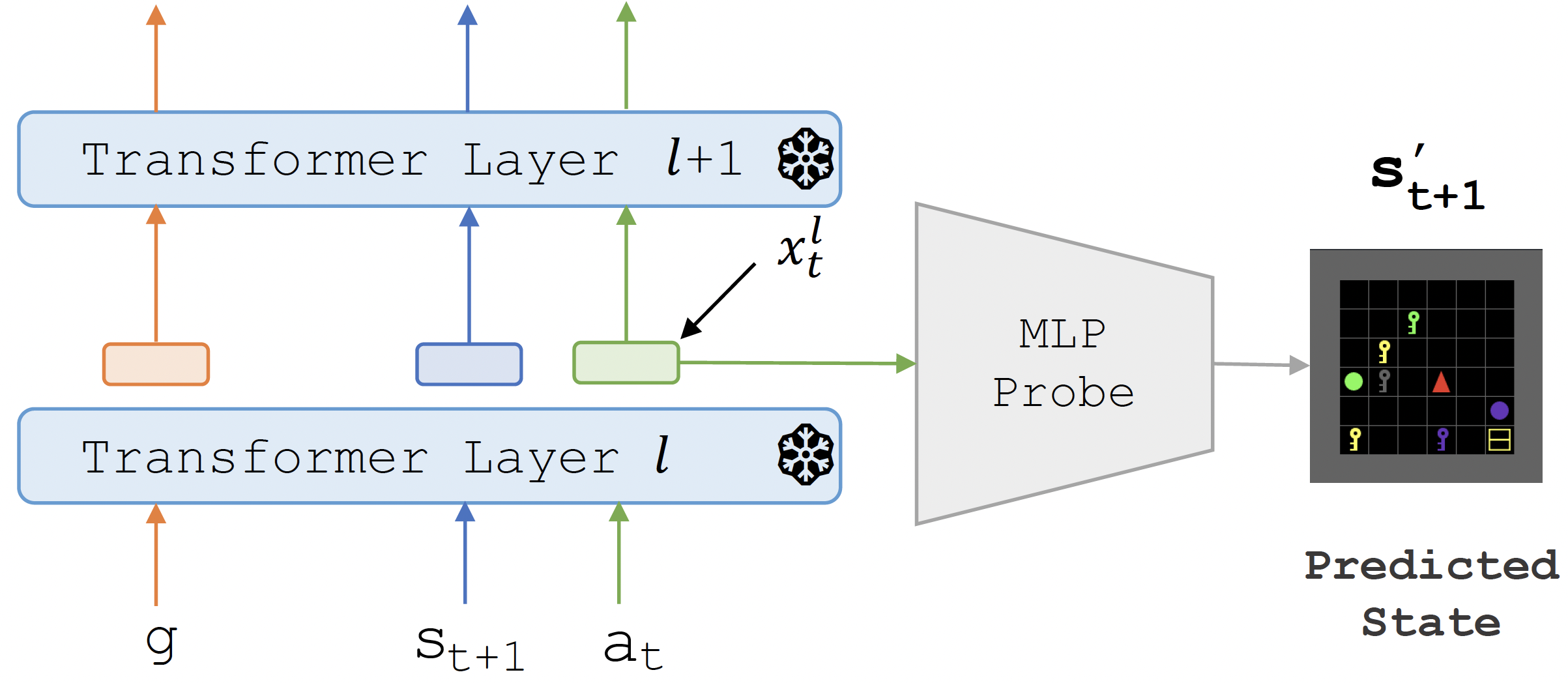

Once we have models trained to perform a language-conditioned navigation task, we train simple classifiers as probes to test whether the internal activations of a trained model contain representations of abstract environmental states (e.g., current board layouts). If the trained probes can accurately reconstruct the current state when it is not explicitly given to the model, this suggests that abstract state representations can emerge during the model's pretraining. We introduce two sequence modeling setups in BabyAI: the Regular setup, in which the current state is fed to the model as part of its input, and the Blindfolded setup, in which only the initial layout, the language instruction, and the actions are given.
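As a rough illustration of probing, the sketch below trains a linear softmax classifier to read a label (for example, the content of one grid cell) out of activation vectors. Everything here is an assumption for illustration: the page only says "simple classifiers", the activations are synthetic stand-ins rather than real model activations, and the names `train_linear_probe`, `probe_accuracy`, and `make_synthetic_activations` are hypothetical.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_for(weights, biases, x):
    return [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
            for w, b in zip(weights, biases)]


def train_linear_probe(activations, labels, num_classes, epochs=50, lr=0.1):
    """Fit a linear softmax probe with plain SGD on cross-entropy loss."""
    dim = len(activations[0])
    weights = [[0.0] * dim for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in zip(activations, labels):
            probs = softmax(logits_for(weights, biases, x))
            for c in range(num_classes):
                grad = probs[c] - (1.0 if c == y else 0.0)
                for j in range(dim):
                    weights[c][j] -= lr * grad * x[j]
                biases[c] -= lr * grad
    return weights, biases


def probe_accuracy(weights, biases, activations, labels):
    correct = 0
    for x, y in zip(activations, labels):
        scores = logits_for(weights, biases, x)
        correct += int(max(range(len(scores)), key=scores.__getitem__) == y)
    return correct / len(labels)


def make_synthetic_activations(num_per_class, num_classes, dim, rng):
    """Synthetic stand-in for activations: class c is shifted along axis c."""
    data = []
    for c in range(num_classes):
        for _ in range(num_per_class):
            x = [rng.gauss(0.0, 0.3) for _ in range(dim)]
            x[c] += 2.0
            data.append((x, c))
    rng.shuffle(data)
    return [x for x, _ in data], [y for _, y in data]


rng = random.Random(0)
train_x, train_y = make_synthetic_activations(30, 3, 4, rng)
test_x, test_y = make_synthetic_activations(30, 3, 4, rng)
probe_w, probe_b = train_linear_probe(train_x, train_y, num_classes=3)
held_out_accuracy = probe_accuracy(probe_w, probe_b, test_x, test_y)
```

In an actual probing experiment, `train_x` would be activations extracted from a chosen layer of the trained sequence model and `train_y` the ground-truth state labels; high held-out accuracy would then indicate that the state is decodable from those activations.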

|

|

We train one sequence model for each setup: the Complete-State Model for the Regular setup and the Missing-State Model for the Blindfolded setup.
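The difference between the two training inputs can be sketched as a simple transformation of a trajectory's state list. This is an illustrative assumption rather than the authors' data pipeline: the function name `blindfold` is hypothetical, hidden states are represented as `None`, and the `keep_last` flag is included only because this page does not settle whether the final state is also retained.

```python
from typing import List, Optional


def blindfold(states: List[str], keep_last: bool = False) -> List[Optional[str]]:
    """Hide every state except the initial one (and optionally the last).

    Hidden states are marked with None; how a real model represents a
    missing state is not specified here and is left open.
    """
    hidden: List[Optional[str]] = [None] * len(states)
    if states:
        hidden[0] = states[0]
        if keep_last:
            hidden[-1] = states[-1]
    return hidden


# Regular setup: every state is visible to the model.
regular_states = ["s0", "s1", "s2", "s3"]
# Blindfolded setup: only the initial layout survives.
blindfolded_states = blindfold(regular_states)
```

Under these assumptions, a Complete-State model would be trained on `regular_states` and a Missing-State model on `blindfolded_states`, with the instruction and actions unchanged in both cases.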

We compare against two baselines. The randomly initialized baseline is a probe trained on a sequence model with random weights. The initial state baseline always predicts the initial state, without considering the actions taken by the agent. |
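The initial state baseline can be illustrated with a small sketch that scores "always predict the initial layout" against the true layout at each step. The per-cell accuracy metric, the string encoding of cells, and the names `cell_accuracy` and `initial_state_baseline` are assumptions for illustration; the page does not state how reconstruction accuracy is computed.

```python
from typing import List

Grid = List[List[str]]


def cell_accuracy(predicted: Grid, target: Grid) -> float:
    """Fraction of grid cells whose predicted content matches the target."""
    total = 0
    matches = 0
    for pred_row, target_row in zip(predicted, target):
        for pred_cell, target_cell in zip(pred_row, target_row):
            total += 1
            matches += int(pred_cell == target_cell)
    return matches / total


def initial_state_baseline(states: List[Grid]) -> float:
    """Average per-cell accuracy when every step is predicted as states[0]."""
    initial = states[0]
    return sum(cell_accuracy(initial, s) for s in states) / len(states)


# Toy 2x2 trajectory: the agent moves one cell to the right.
trajectory = [
    [["agent", "empty"], ["empty", "key"]],
    [["empty", "agent"], ["empty", "key"]],
]
print(initial_state_baseline(trajectory))  # 0.75
```

Because static objects do not move, this baseline can score well on unchanged cells while missing everything the agent's actions alter, which is why it serves as a useful reference point for the probes.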

| 1. Role of Intermediate State Observations in the Emergence of State Representations |

|

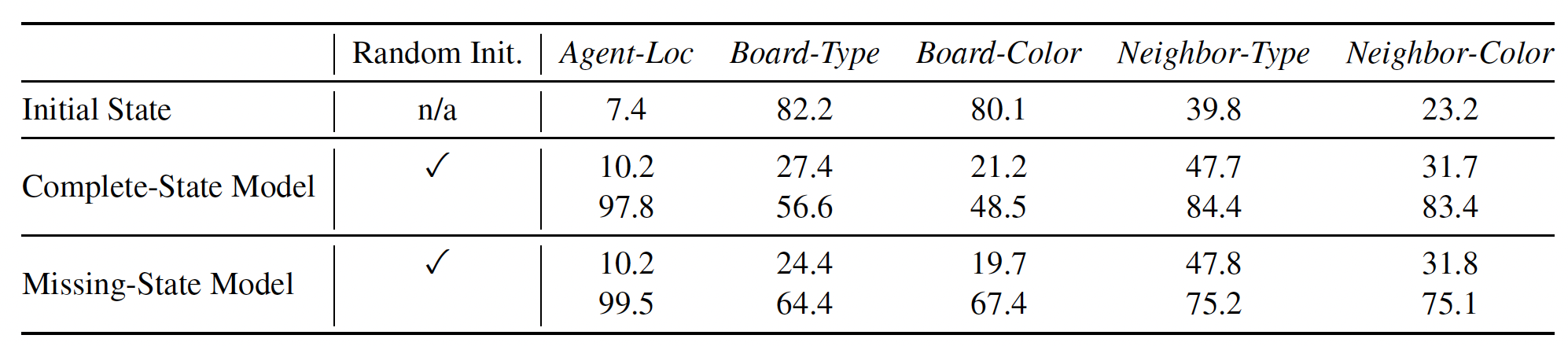

| We perform probing on the internal activations of a pretrained Complete-State model and a pretrained Missing-State model. For the Complete-State model, although the current state is explicitly fed to the model as part of its input, the poor performance of the probe on randomly initialized weights confirms that this information is not trivially preserved in the internal activations: the model needs to be properly pretrained for that to happen. For the Missing-State model, even though the current states are not explicitly given, they can be reconstructed reasonably well from the model's internal representations. |

| 2. Role of Language Instructions in the Emergence of State Representations |

|

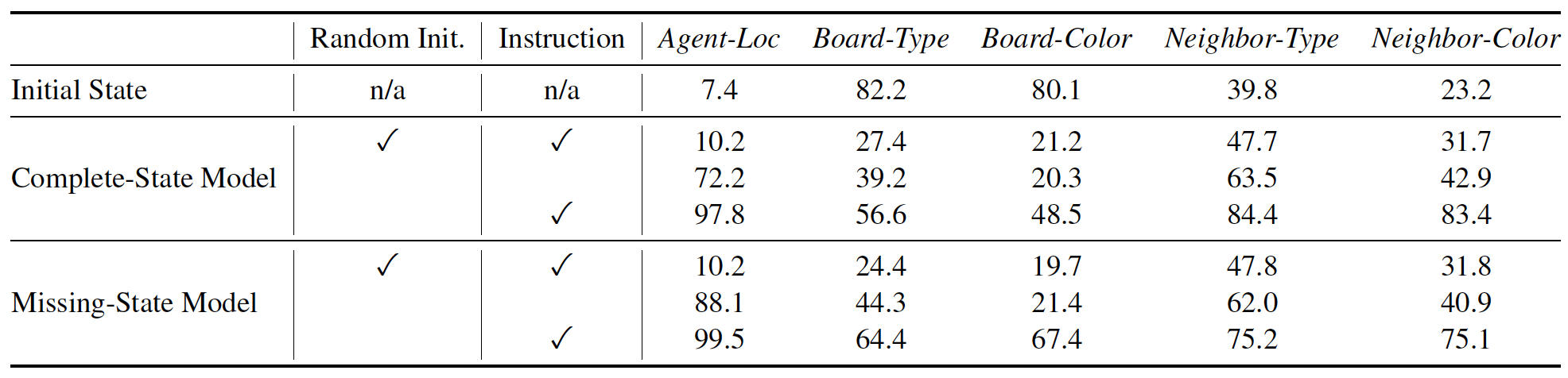

| To study how language instructions affect the emergence of internal representations of states, we pretrain a Complete-State model and a Missing-State model without passing in language instructions. When the language instruction is absent during pretraining, state information cannot be recovered from the models as accurately as from the models trained with language instructions, which suggests that language instructions play an important role in the emergence of internal state representations. |

| 3. Blindfolded Navigation Performance |

|

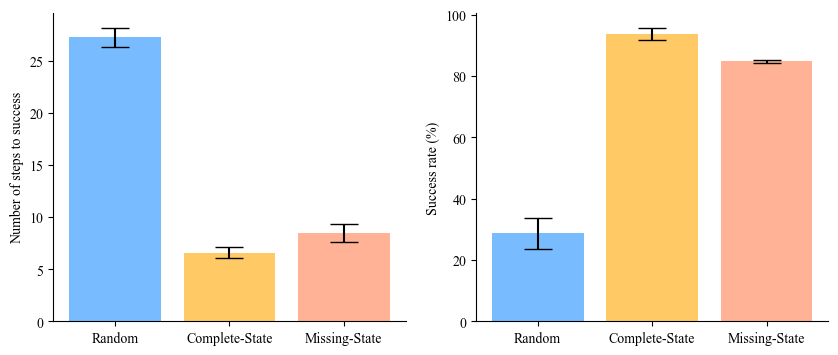

| We further explore the practical implications of the emergence of internal state representations by evaluating pretrained Complete-State and Missing-State models on GoToLocal. We observe that the Missing-State model performs competitively against the Complete-State model, even though the Missing-State model has no access to the intermediate (non-initial/last) states during its training. This aligns with our expectation that a model which learns internal representations of states can use such representations to predict next actions. |

|

Tian Yun*, Zilai Zeng*, Kunal Handa, Ashish V. Thapliyal, Bo Pang, Ellie Pavlick, Chen Sun. Emergence of Abstract State Representations in Embodied Sequence Modeling. In EMNLP 2023. |

|

Acknowledgements: This template was originally made by Phillip Isola and Richard Zhang for a colorful ECCV project. |